A couple of conversations with data leaders have reminded me of the data wrangling challenges that a number of you are still facing.

Despite all the media coverage of deep learning and other more advanced techniques, most data science teams are still struggling with more basic data problems.

Even well-established analytics teams can still lack the single customer view, easily accessible data lake or analytical playpen that they need for their work.

Insight leaders also regularly express frustration that they and their teams are still bogged down in data ‘firefighting’, rather than getting to analytical work that could be transformative.

Part of the problem may be lack of focus. Data and data management are often still considered the least sexy part of customer insight or data science. All too often, leaders lack clear data plans, models or strategy to develop the data ecosystem (including infrastructure) that will enable all other work by the team.

Back in 2015, we conducted a poll of leaders, asking about their use of data models and metadata. Shockingly, none of those surveyed had conceptual data models in place, and half also lacked logical data models. Exacerbating this lack of a clear, technology-independent understanding of their data, all leaders surveyed cited a lack of effective metadata. Without these tools in place, data management risks considerable rework and can feel like a frustrating, best-endeavors DIY exercise.


So, what are the common data problems I hear about when meeting data leaders across the country? Here is the one that crops up most often:

Too much time taken up on data prep

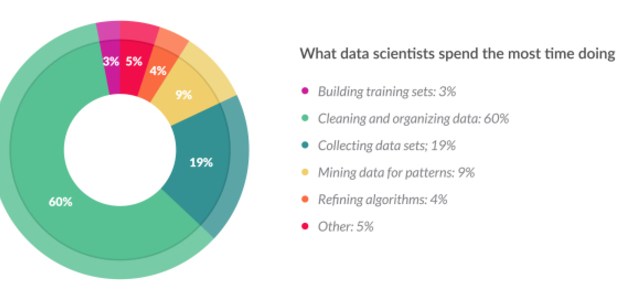

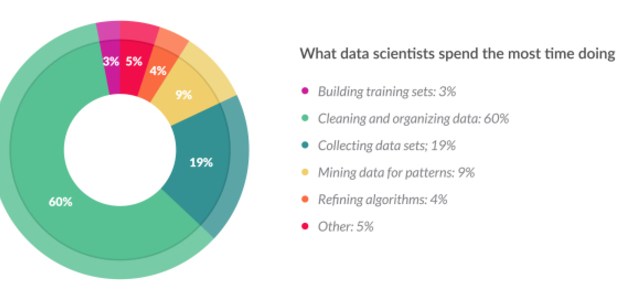

I was reminded of this often-cited challenge by a LinkedIn post from Martin Squires, experienced leader of Boots’ insight team. Sharing an article originally published in Forbes magazine, Martin reflected on how little has changed in 20 years. This survey shows that, just as Martin and I found 20 years ago, more than 60% of data scientists' time is taken up with cleaning and organizing data.

The problem might now have new names, like data wrangling or data munging, but it remains the same. From my own experience of leading teams, this problem will not be resolved by simply waiting for the next generation of tools. Instead, insight leaders need to face the problem and end this waste of highly skilled analyst time.

Here are some common reasons that the problem has proved intractable:

- Underinvestment in technology whose benefit is not seen outside of analytics teams (data lakes/ETL software)

- Lack of transparency to internal customers about the amount of time taken up in data prep (inadequate briefing process)

- Lack of consequences for IT or internal customers if the situation is allowed to continue (share the pain)

On that last point, I want to reiterate advice I have given to coaching clients. Ask yourself honestly: are you your own worst enemy by keeping the show on the road despite these data barriers? Have you ever considered letting a piece of work or a regular job fail, to highlight technology problems that your team is currently masking with manual workarounds? It’s worth considering as a tactic.

Beyond that more radical approach, what can data leaders do to overcome these problems and deliver successful data projects that reduce the data wrangling workload? Here are three tips that I hope will set you on the right path.

Create a playpen to enable play to prioritize data needed

Here, once again, language can confuse or divide. Whether one talks about data lakes or, less impressively, playpens or sandpits within a server or data warehouse, the same benefits can be realized.

More than a decade working across IT roles, followed by leading data projects from the business side, taught me that one of the biggest causes of delay and mistakes was data mapping work. The arduous task of accurately mapping all the data required by a business, from source systems through any required ETL (extract, transform and load) layers and on to the analytics database solution, is fraught with problems.

All too often, this is the biggest cost and cause of delays or rework for data projects. Frustratingly, those who do audit usage afterward often find that not all the data loaded is actually used. So, after much frustration for both IT and insight teams, only a subset of the data really added value.

This is where a free-format data lake or playpen can really add value. It should be used to let IT dump data there with minimal effort, or to let insight teams bring potential data sources into the playpen as one-off extracts. Here, analysts or data scientists have the opportunity to play with the data. However, this capability is far more valuable than that sounds. A better term is perhaps ‘data lab’. Here, the business experts can try out different potential data feeds, and the variables within them, to learn which are actually useful, predictive or used for analysis or modeling that will add value.

The great benefit of this approach is that it offers a lower-cost, more flexible way of de-scoping the data variables and data feeds actually required in live systems. Reducing those can radically increase the speed of delivery for new data warehouses or for releases of changes and upgrades.

Recruit and develop data specialist roles outside of IT

The approach proposed above, together with innumerable change projects across today’s businesses, needs to be informed by someone who knows what each data item means. That may sound obvious, but too few businesses have clear knowledge management or career development strategies to meet that need.

Decades ago, small IT teams contained long-serving experts who had built all the systems used and were actively involved in fixing any data issues that arose. If they were also sufficiently knowledgeable about the business and how each data item was used by different teams, they could potentially provide the data expertise I propose. However, those days are long gone.

Most corporate IT teams are now closer to the proverbial baked bean factory. They may have the experience and skills needed to deliver the data infrastructure, but they lack any depth of understanding of the data items (or blood) that flow through those arteries. If the data needs of analysts or data scientists are to be met, those analysts need to be able to talk with experts in data models, data quality and metadata. Together, they can discuss what the analysts are seeking to understand or model in the real world of a customer, and translate that into the most accurate and accessible proxy among the data variables available.

So, I recommend that insight leaders seriously consider the benefit of in-house data management teams, with real specialization in understanding data and curating it to meet team needs. We’ve previously posted some hints for getting the best out of these teams.

Grow incrementally, delivering value each time, to justify investment

I’m sure all change leaders and most insight leaders have heard the advice on how to eat an elephant or deliver major change. That maxim, to proceed one bite at a time, is as true as ever.

Although it can help for an insight leader to take time out to step back and consider all the data needs and gaps, leaders also need to be pragmatic about the best approach to deliver on those needs. Using the data lake approach and data specialists mentioned above, time should be taken to prioritize data requirements.


Investigating data requirements so that each can be scored against both potential business value and ease of implementation (in the style of a classic Boston Consulting Group grid) can help with scoping decisions. But I’d also counsel against simply cherry-picking the most promising and easiest-to-access variables.

Instead, think in terms of use cases. Most successful insight teams have grown incrementally, by proving the value they can add to a business one application at a time. So, dimensions such as the urgency and importance of different business problems also come into play.
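To make the value-versus-ease scoring a little more concrete, here is a minimal sketch in Python that places candidate use cases (rather than individual variables) on a simple two-by-two grid. Everything in it is a hypothetical assumption for illustration: the 1 to 5 scoring scales, the threshold of 3, the quadrant labels and the example use cases are not from any real survey or tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UseCase:
    name: str
    value: int  # hypothetical 1-5 score for potential business value
    ease: int   # hypothetical 1-5 score for ease of implementation (data access, number of variables)


THRESHOLD = 3  # assumed cut-off separating "high" from "low" on both axes


def quadrant(uc: UseCase) -> str:
    """Place a use case in one cell of an assumed value/ease two-by-two grid."""
    high_value = uc.value >= THRESHOLD
    easy = uc.ease >= THRESHOLD
    if high_value and easy:
        return "quick win"
    if high_value:
        return "major project"
    if easy:
        return "fill-in"
    return "deprioritize"


def prioritize(cases: List[UseCase]) -> List[UseCase]:
    """Rank by value first, breaking ties on ease; both descending."""
    return sorted(cases, key=lambda uc: (uc.value, uc.ease), reverse=True)


# Hypothetical example use cases with made-up scores
cases = [
    UseCase("churn model", 5, 4),
    UseCase("campaign attribution", 4, 2),
    UseCase("store footfall dashboard", 2, 5),
    UseCase("sentiment from call notes", 2, 1),
]

for uc in prioritize(cases):
    print(f"{uc.name}: {quadrant(uc)}")
```

The grid is only a starting point for discussion: urgency, importance and the strength of the business sponsor still need to be weighed alongside these two scores.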

For your first iteration of a project (investing in extra data, then proving value to the business to secure budget for the next wave), look for the following characteristics:

- Analysis using the data lake/playpen has shown potential

- Relatively easy-to-access data and not too many variables (in the quick win category for the IT team)

- An important business problem that is widely seen as a current priority to fix (with a rapid, measurable impact)

- A good stakeholder relationship with the business leader in the application area (a current or potential advocate)

How is your data wrangling going?

Do your analysts spend too much time hunting down the right data and then corralling it into the form needed for required analysis? Have you overcome the time burned by data prep? If so, what has worked for you and your team?

We would love to hear of leadership approaches/decisions, software or processes that you have found helpful. Why not share them here, so other insight leaders can also improve practice in this area?

Let’s not wait another 20 years to stop the data wrangling drain. There is too much potentially valuable insight or data science work to be done.
